The group's research focuses on the cognitive and affective neuroscience of intersensory perception (the interplay between different sensory systems), primarily the interaction between seeing and hearing, and on how emotion and cognition interact in humans. Behavioural and neurofunctional approaches (ERPs, fMRI, MEG and TMS) are used in an integrated fashion.

Rare neuropsychological disorders (e.g. prosopagnosia) and more common neurological and neuropsychiatric disorders (e.g. autism, unilateral neglect, Huntington’s disease and schizophrenia) are investigated and compared with the patterns observed in neurologically intact individuals.

De Gelder's research comprises four main strands:

1. Non-conscious recognition in patients with cortical damage.

De Gelder's group has carried out novel research on the ability of patients with striate cortex lesions to identify the emotional meaning of visual stimuli of which they are not aware. Such non-conscious recognition had not previously been thought possible in these patients. The group has also recently developed a new, indirect methodology for studying non-conscious recognition of facial expressions (see Nature Reviews Neuroscience, 2010, and also PNAS, 2002; 2003; 2005; 2009).

2. Emotional expression in whole bodies.

The computer crashes. What do we do? We self-consciously scratch our heads, fiddle fruitlessly with the computer, tear our hair and nervously bite our lips. Even though we don't utter a single word, anybody watching would know exactly what is going on inside us. Our body language is part of us. Because emotions, gestures and facial expressions are linked in the brain, even people who were born deaf and blind turn down the corners of their mouths to express sadness and smile to show that they are happy (see Nature Reviews Neuroscience, 2006).

3. Face recognition and its deficits.

De Gelder and her research team have carried out a wide variety of studies in this area. The most important finding to date is that inverting face stimuli improved the face identification performance of prosopagnosic patients, whereas inversion impairs performance in normal observers. The theoretical implications of this paradoxical “inversion superiority” effect have been incorporated into a new theory of face processing (see PNAS, 2003).

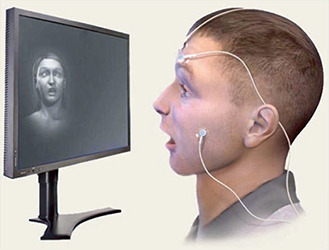

4. Multisensory perception and the interaction between auditory and visual processes.

Cross-modal integration in speech perception, audio-visual localisation and the perception of affect are all investigated. The work on affect examines how identifying the emotional expression of a face interacts with the tone of voice in which a simultaneously heard sentence is spoken (see TICS, 2003).